Amazon S3: Common CLI Commands & Reference

- Download file from bucket

- Download folder and subfolders recursively

- Delete folder in bucket

- Upload file to bucket

- Upload folder to bucket

- Upload multiple files

- View bucket information

- Download part of large file from S3

- Download file via "Requester pays"

S3 is one of the most widely used AWS offerings.

| aws s3 | aws s3api |
|---|---|
| For simple filesystem operations like mv, ls, cp, etc. | For other operations |

After installing the AWS CLI via pip install awscli, you can access S3 operations in two ways: both the s3 and the s3api commands are installed.

Download file from bucket

cp stands for copy; . stands for the current directory

$ aws s3 cp s3://somebucket/somefolder/afile.txt .

Download folder and subfolders recursively

Useful for downloading log folders and things of that nature.

$ aws s3 cp s3://somebucket/somefolder/ . --recursive

Delete folder in bucket

(along with any data within)

$ aws s3 rm s3://somebucket/somefolder --recursive
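Since a recursive delete removes everything under the prefix, it can help to preview it first. The aws s3 commands accept a --dryrun flag that prints the operations without performing them. A minimal sketch, assuming a helper named preview_rm (a made-up name, not part of the AWS CLI):

```shell
# preview_rm is a hypothetical helper, not part of the AWS CLI.
# It shows what a recursive delete would remove, without deleting anything.
# $1: S3 prefix to inspect, e.g. s3://somebucket/somefolder
preview_rm() {
    aws s3 rm "$1" --recursive --dryrun
}

# Usage (not run here):
#   preview_rm s3://somebucket/somefolder
```

Once the listed objects look right, run the same command without --dryrun to actually delete them.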

Upload file to bucket

Don't forget to add / to the end of the folder name!

$ aws s3 cp /path/to/localfile s3://somebucket/somefolder/

Upload folder to bucket

Don't forget to add / to the end of the folder name!

Use --recursive, and make sure to add the folder name to the target too.

EXAMPLE: copy a local folder to the root of a bucket

$ aws s3 cp somefolder/ s3://somebucket/somefolder/ --recursive

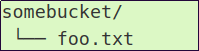

Before

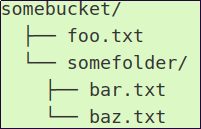

After

Upload multiple files

See my post on linux find examples for more ways to use find.

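Say, for instance, you have multiple files that you want to upload to the same folder in a bucket. If they all have names matching a common pattern (here, names starting with a), you can pipe find into xargs:

$ ls

aa ab ac ad ae

$ find . -type f -name "a*" | xargs -I {} aws s3 cp {} s3://somebucket/somefolder/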

Another way is to move all the files you want into a directory and then upload everything in that directory using the --recursive parameter.
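The find and xargs approach can be wrapped in a small function that also copes with spaces in file names. This is only a sketch: upload_matching and the AWS_CMD variable are hypothetical names introduced here for illustration, and -maxdepth assumes GNU or BSD find.

```shell
# upload_matching DIR PATTERN DEST
# Hypothetical helper: copies every regular file directly inside DIR whose
# name matches PATTERN to the S3 prefix DEST (which should end in /).
# AWS_CMD is an assumed override that defaults to the real aws command;
# setting it to echo prints the commands instead of running them.
upload_matching() {
    # -print0 / -0 pass file names NUL-delimited, so spaces are preserved.
    # With -I {}, xargs runs one "aws s3 cp" per file.
    find "$1" -maxdepth 1 -type f -name "$2" -print0 |
        xargs -0 -I {} "${AWS_CMD:-aws}" s3 cp {} "$3"
}

# Usage (not run here):
#   upload_matching . 'a*' s3://somebucket/somefolder/
```

Running it once with AWS_CMD=echo is a cheap way to check which files the pattern picks up before anything is uploaded.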

View bucket information

View stats such as total size and number of objects.

$ aws s3 ls s3://somebucket/path/to/directory/ --summarize --human-readable --recursive

Sample output (some rows omitted):

2015-08-24 23:11:03 40.0 MiB news.en-00098-of-00100
2015-08-24 23:11:04 39.9 MiB news.en-00099-of-00100

Total Objects: 100
Total Size: 3.9 GiB
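For large prefixes the per-object rows can run to thousands of lines when only the totals matter. One possible way to keep just the summary is to filter the output with awk; summary_totals is a hypothetical helper name, and the sketch assumes the summary lines contain "Total Objects:" and "Total Size:" as in the sample output.

```shell
# summary_totals is a hypothetical helper name.
# Reads the output of "aws s3 ls ... --summarize" on stdin and prints
# only the two summary lines, with leading whitespace trimmed.
summary_totals() {
    awk '/Total Objects:|Total Size:/ { sub(/^[ \t]+/, ""); print }'
}

# Usage (not run here):
#   aws s3 ls s3://somebucket/path/to/directory/ \
#       --summarize --human-readable --recursive | summary_totals
```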

Download part of large file from S3

Use s3api get-object with --range "pattern".

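EXAMPLE: download only the first 1 MB (1 million bytes) of the file located at s3://somebucket/path/to/file.csv. Byte ranges are zero-based and inclusive, so the first million bytes are 0-999999; the last argument (output) is the local file to write to.

$ aws s3api get-object --range "bytes=0-999999" --bucket "somebucket" --key "path/to/file.csv" output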
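When fetching a large file piece by piece, the range for each piece has to be computed with the inclusive end in mind. A minimal sketch, assuming a helper named byte_range (a made-up name) that takes a 0-based chunk number and a chunk size in bytes:

```shell
# byte_range CHUNK_INDEX CHUNK_SIZE
# Hypothetical helper: prints the value for --range covering one chunk.
# Byte ranges are inclusive, so a chunk of N bytes starting at offset S
# covers bytes S through S+N-1.
byte_range() {
    printf 'bytes=%d-%d\n' "$(( $1 * $2 ))" "$(( ($1 + 1) * $2 - 1 ))"
}

# Usage (not run here): fetch the second 1 MB chunk of the file
#   aws s3api get-object --range "$(byte_range 1 1000000)" \
#       --bucket "somebucket" --key "path/to/file.csv" output.part1
```

Here byte_range 0 1000000 yields bytes=0-999999, and byte_range 1 1000000 yields bytes=1000000-1999999, so consecutive chunks neither overlap nor leave gaps.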

Download file via "Requester pays"

Use s3api get-object with --request-payer requester

Many datasets and other large files are available via a requester-pays model. You can download the data, but you (rather than the bucket owner) pay for the request and the data transfer.

One example is the IMDB website; they have recently made their datasets available via S3.

EXAMPLE: To download one of the IMDB files, use --request-payer requester to signal that you know that you are going to be charged for it:

$ aws s3api get-object --bucket imdb-datasets --key documents/v1/current/name.basics.tsv.gz --request-payer requester name.basics.tsv.gz

References

To use the AWS CLI you need a valid AWS account; see the AWS documentation for more information on installing and configuring the AWS CLI.