Paper Summary: Self-instruct: Aligning Language Models with Self-generated Instructions

Last updated:Please note This post is mainly intended for my personal use. It is not peer-reviewed work and should not be taken as such.

WHAT

A way to use a vanilla LLM to generate instruction-tuning data to fine-tune itself.

WHY

Because human-annotated datasets are expensive to come by.

HOW

1) Use the pre-trained LLM itself to generate input/output instruction pairs, from a small set of seed pairs (one seed example per task, 175 examples in total).

2) Perform supervised fine-tuning with the pairs from step 1), using heuristics to classify which outputs are better than others.

CLAIMS

- In one experiment, GPT3self-instruct hits 44.4% of correct answers while InstructGPT (GPT3 aligned with RLHF) hits 50.7%.

NOTES

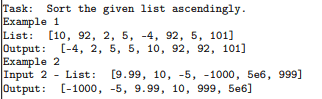

- All tasks are represented in the form

(task definition, input/output pairs). It's a versatile way to represent any kind of task. Example below:

- No need to host a local version of GPT3. Everything was done using OpenAI CLI tools and the API

MY 2¢

A small set (175 samples) of human-labelled instances is used to "bootstrap" the process.

It isn't clear how the fine-tuning was done. Was it just SFT, RLHF or something else? The authors just say that they used the OpenAI Fine-tuning API.

References

1:Such as InstructGPT/ChatGPT which are based on RHLF

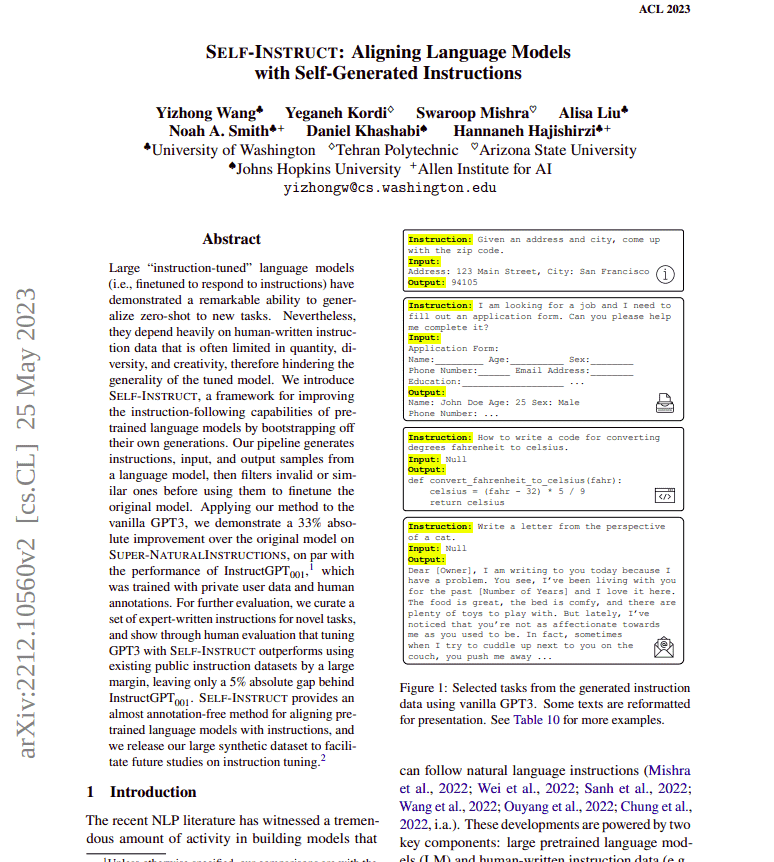

Self-Instruct: Aligning Language Models with Self-Generated Instructions

Self-Instruct: Aligning Language Models with Self-Generated Instructions How the authors represent the instruction tasks to align the model.

How the authors represent the instruction tasks to align the model.