Setup Keras+Theano Backend and GPU on Ubuntu 16.04

- See if your Nvidia card is CUDA-enabled

- Install numpy and scipy with native BLAS linkage

- Install Keras and Theano and link BLAS libraries

- Install NVIDIA driver

- Install CUDA toolkit version 8.0

- Test the installation

- Test Theano with GPU

- Troubleshooting installation

- Failed to fetch file: /var/cuda-repo-8-local/Release

- ERROR (theano.sandbox.cuda): nvcc compiler not found on $PATH. Check your nvcc installation and try again

- ERROR (theano.sandbox.cuda) Failed to compile cuda_ndarray.cu: libcublas.so.8.0: cannot open shared object file: No such file or directory

- modprobe: ERROR: could not insert 'nvidia_uvm': Unknown symbol in module

- ERROR (theano.sandbox.gpuarray): pygpu was configured but could not be imported

- ERROR: Installation failed: using unsupported compiler

- ERROR: error: ‘memcpy’ was not declared in this scope

- Other tips

Using GPUs to process tensor operations is one of the main ways to speed up training of large, deep neural networks.

Here are a few pointers on getting up and running with Keras and Theano on a clean Ubuntu 16.04 machine with an Nvidia graphics card.

See if your Nvidia card is CUDA-enabled
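
To look your card up in NVIDIA's list of CUDA-enabled GPUs you first need its model name. One way to get it is to filter the output of lspci. The sketch below assumes lspci is installed (on Ubuntu it comes with the pciutils package); the function names are made up for illustration. It only reports which NVIDIA devices are present and cannot tell by itself whether a card supports CUDA.

```python
import subprocess


def find_nvidia_devices(lspci_output):
    """Return the lspci lines that mention an NVIDIA device."""
    return [line.strip()
            for line in lspci_output.splitlines()
            if "nvidia" in line.lower()]


def list_nvidia_devices():
    """Run lspci and return its NVIDIA lines (empty list if lspci is unavailable)."""
    try:
        output = subprocess.check_output(["lspci"])
    except (OSError, subprocess.CalledProcessError):
        return []
    return find_nvidia_devices(output.decode("utf-8", "replace"))
```

Calling list_nvidia_devices() on the machine you plan to use should return one line per NVIDIA device; an empty list means either no NVIDIA card was found or lspci could not be run.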

Install numpy and scipy with native BLAS linkage

$ sudo apt-get install libopenblas-dev liblapack-dev gfortran python-numpy python-scipy

Install Keras and Theano and link BLAS libraries

Create a new virtualenv using system packages:

$ virtualenv --system-site-packages -p python2.7 venv2

$ source venv2/bin/activate

Install Theano and Keras:

(venv2) $ pip install Theano

(venv2) $ pip install Keras

(venv2) $ pip install Cython
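
Before moving on, it can help to confirm that the packages are importable from inside the virtualenv. The following is a minimal sketch using only the standard library; the helper name installed_versions is hypothetical, and it assumes each package exposes a __version__ attribute (it falls back to "unknown" otherwise).

```python
import importlib


def installed_versions(module_names):
    """Map each module name to its version string, or None if the import fails."""
    versions = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None
        else:
            # Not every module defines __version__, so fall back to "unknown".
            versions[name] = getattr(module, "__version__", "unknown")
    return versions


# Example: print(installed_versions(["numpy", "scipy", "theano", "keras"]))
```

Run it with the python interpreter of the activated virtualenv; a value of None for any package means that package could not be imported there.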

Install NVIDIA driver

In order to use the toolkit, you must install the proprietary NVIDIA driver.

This can be done by running the following two commands:

$ sudo apt-get install xserver-xorg-video-intel

$ sudo nvidia-installer --update

Install CUDA toolkit version 8.0

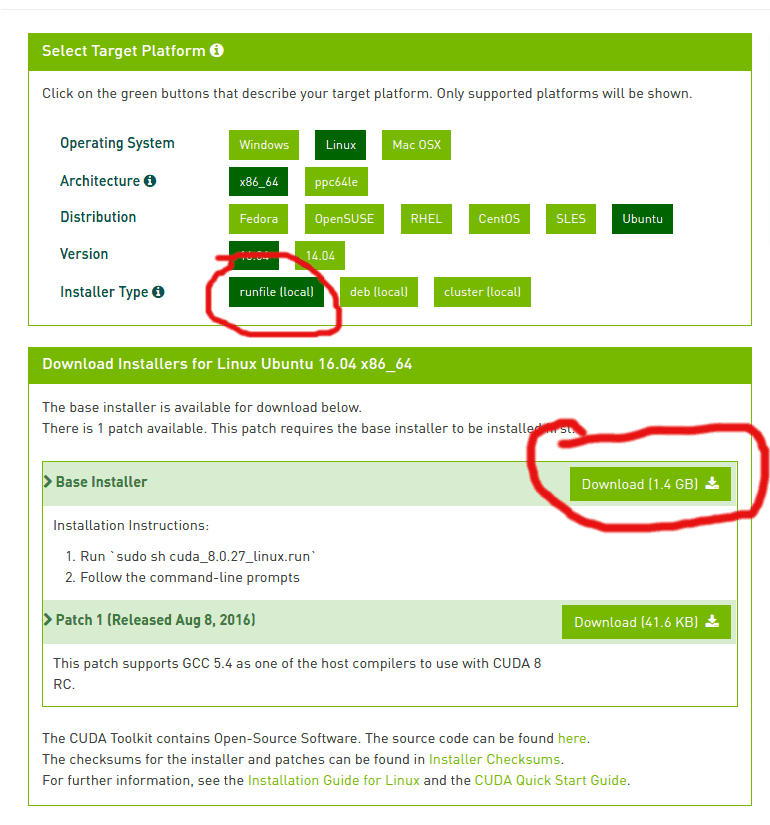

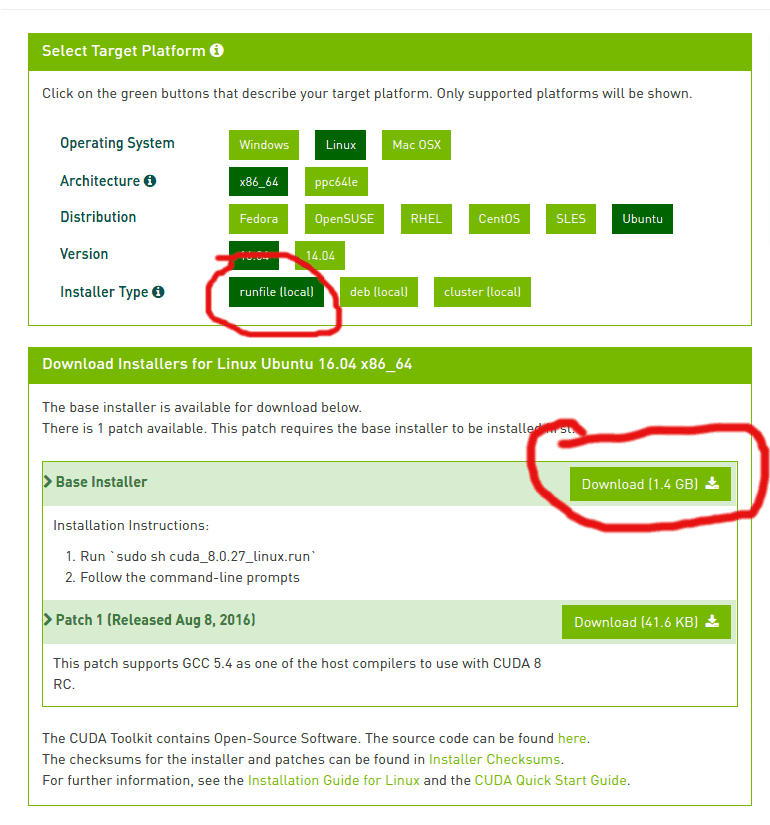

Version 8 is the most recent version (as of this writing) for Ubuntu 16.04.

Go to this link: NVIDIA - CUDA Downloads and look for a link for CUDA Toolkit 8.

When this post was first written, you had to register as a developer to download the most recent toolkit. You don't need to register anymore. It looks like this:

As of this post, click this link to access CUDA Toolkit 8

Once you follow the link, you'll see the downloads page for CUDA Toolkit 8. Download the .run file:

CUDA Toolkit 8

Before running the .run file, you must shut down X:

$ sudo service lightdm stop

After shutting down X, hit Ctrl+Alt+F1 (or F2, F3 and so on) and log in again.

Go to the directory where the .run file was downloaded and run the following command to start the installer:

$ sudo sh cuda_8.0.27_linux.run

Test the installation

Build the sample files

Go to your home directory, extract the sample CUDA files there and build them using make:

$ cd ~/

$ /usr/local/cuda-8.0/bin/cuda-install-samples-8.0.sh .

$ cd NVIDIA_CUDA-8.0_Samples/

$ make

Run one of the files you built, called deviceQuery. You should see output that resembles the one below:

$ cd ~/NVIDIA_CUDA-8.0_Samples/bin/x86_64/linux/release

$ ./deviceQuery

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "GeForce GT625M"

CUDA Driver Version / Runtime Version 8.0 / 8.0

... (SOME LINES OMITTED) ...

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 8.0, CUDA Runtime Version = 8.0, NumDevs = 1, Device0 = GeForce GT625M

Result = PASS

Good. This means your GPU was identified and can be used. Now let's get it working on Theano.
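
If you want to check the deviceQuery result from a script rather than by eye, the two lines that matter are the final summary (NumDevs) and the Result line. Here is a small sketch that pulls both out of the captured output. The function name and the returned dictionary layout are illustrative choices, not part of the CUDA samples.

```python
import re


def parse_device_query(output):
    """Extract the pass/fail result and the device count from deviceQuery output."""
    passed = re.search(r"Result\s*=\s*PASS", output) is not None
    match = re.search(r"NumDevs\s*=\s*(\d+)", output)
    num_devices = int(match.group(1)) if match else 0
    return {"passed": passed, "num_devices": num_devices}
```

You could feed it the text produced by ./deviceQuery (for instance, saved to a file) and stop early if "passed" is False or "num_devices" is 0.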

Test Theano with GPU

Download this Python script: Theano Testing with GPU. I'll call it TheanoGPU.py.

Run it in the same virtualenv you created at the beginning of the tutorial. Note the extra shell parameters you need before the python command:

$ THEANO_FLAGS=mode=FAST_RUN,device=gpu,floatX=float32 python TheanoGPU.py

Using gpu device 0: GeForce GT625M (CNMeM is disabled, cuDNN not available)

[GpuElemwise{exp,no_inplace}(<CudaNdarrayType(float32, vector)>), HostFromGpu(GpuElemwise{exp,no_inplace}.0)]

Looping 1000 times took 0.765961 seconds

Result is [ 1.23178029 1.61879349 1.52278066 ..., 2.20771813 2.29967761 1.62323296]

Used the gpu

Congratulations! You've successfully linked Keras (Theano Backend) to your GPU! The script took only 0.766 seconds to run!

Optionally, if you want to compare GPU performance against a regular CPU, you just need to change one parameter to measure how long the script takes on a CPU:

$ THEANO_FLAGS=mode=FAST_RUN,device=cpu,floatX=float32 python TheanoGPU.py

[Elemwise{exp,no_inplace}(<TensorType(float32, vector)>)]

Looping 1000 times took 37.526567 seconds

Result is [ 1.23178029 1.61879337 1.52278066 ..., 2.20771813 2.29967761 1.62323284]

Used the cpu

That took 37 seconds, nearly 50 times as slow as the GPU version!

You will probably see even greater gains with a better GPU (mine is far from a good one).
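
The "nearly 50 times" figure comes straight from the two "Looping 1000 times took ..." lines. As a worked example, the sketch below extracts the timings from those lines and divides them. The helper name loop_seconds is hypothetical, and the two strings are the timings reported in the runs above.

```python
import re


def loop_seconds(script_output):
    """Return the seconds reported on the 'Looping N times took X seconds' line."""
    match = re.search(r"Looping \d+ times took ([0-9.]+) seconds", script_output)
    if match is None:
        raise ValueError("no timing line found in the output")
    return float(match.group(1))


gpu_output = "Looping 1000 times took 0.765961 seconds"
cpu_output = "Looping 1000 times took 37.526567 seconds"

# CPU time divided by GPU time gives the speedup factor.
speedup = loop_seconds(cpu_output) / loop_seconds(gpu_output)
print("GPU speedup: %.1fx" % speedup)  # prints: GPU speedup: 49.0x
```

Swap in the timing lines from your own runs to see how your hardware compares.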

Troubleshooting installation

Lots of things can and will go wrong during this installation. These are some common issues you may run into and how to work around them:

Failed to fetch file: /var/cuda-repo-8-local/Release

This may happen if you download and install the .deb file. The easiest way to circumvent this is to just use the .run file instead.

ERROR (theano.sandbox.cuda): nvcc compiler not found on $PATH. Check your nvcc installation and try again

Open the file ~/.theanorc and edit the path to the CUDA root:

[nvcc]

flags=-D_FORCE_INLINES

[cuda]

root = /usr/local/cuda-8.0
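
If you would rather not edit the file by hand, the same two settings can be written with Python's standard config parser. This is only a sketch: write_theanorc is a made-up helper, the default root assumes the toolkit was installed to /usr/local/cuda-8.0 as in this post, and rewriting the file this way drops any comments it contained.

```python
import os

try:
    import configparser  # Python 3
except ImportError:
    import ConfigParser as configparser  # Python 2


def write_theanorc(path, cuda_root="/usr/local/cuda-8.0"):
    """Set the [nvcc] and [cuda] options shown above, keeping other settings."""
    parser = configparser.RawConfigParser()
    parser.read(path)  # silently skipped if the file does not exist yet
    for section in ("nvcc", "cuda"):
        if not parser.has_section(section):
            parser.add_section(section)
    parser.set("nvcc", "flags", "-D_FORCE_INLINES")
    parser.set("cuda", "root", cuda_root)
    with open(path, "w") as handle:
        parser.write(handle)


# Example: write_theanorc(os.path.expanduser("~/.theanorc"))
```

Existing sections and options in the file are preserved; only the flags and root values are overwritten.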

ERROR (theano.sandbox.cuda) Failed to compile cuda_ndarray.cu: libcublas.so.8.0: cannot open shared object file: No such file or directory

Add the following environment variables to /etc/environment and then reboot:

PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/usr/local/cuda-8.0/bin"

CUDA_HOME=/usr/local/cuda-8.0

LD_LIBRARY_PATH=/usr/local/cuda-8.0/lib64
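
After rebooting, you can verify that the three variables took effect. The sketch below checks a dictionary of environment variables against the values used above; the function name is hypothetical and the default root again assumes /usr/local/cuda-8.0.

```python
import os


def cuda_env_problems(environ, cuda_root="/usr/local/cuda-8.0"):
    """Return a list of human-readable problems with the CUDA-related variables."""
    problems = []
    if cuda_root + "/bin" not in environ.get("PATH", "").split(":"):
        problems.append("PATH does not contain %s/bin" % cuda_root)
    if environ.get("CUDA_HOME") != cuda_root:
        problems.append("CUDA_HOME is not set to %s" % cuda_root)
    if cuda_root + "/lib64" not in environ.get("LD_LIBRARY_PATH", "").split(":"):
        problems.append("LD_LIBRARY_PATH does not contain %s/lib64" % cuda_root)
    return problems


# Example: print(cuda_env_problems(os.environ) or "CUDA environment looks fine")
```

An empty list means all three variables match; otherwise each entry names the variable that still needs fixing.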

modprobe: ERROR: could not insert 'nvidia_uvm': Unknown symbol in module

If you get this error message, look at the output of dmesg to see if there's anything interesting. You may get a message telling you what's wrong. For instance:

[ 226.629034] NVRM: API mismatch: the client has the version 361.77, but

NVRM: this kernel module has the version 304.131. Please

NVRM: make sure that this kernel module and all NVIDIA driver

NVRM: components have the same version.

In my case, this was due to my having an old 304 driver lying around. All I had to do was to purge the package (sudo apt-get purge nvidia-304*) and the error message went away.
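
To spot this kind of mismatch without reading through the whole dmesg log, you can search it for the two version numbers. A minimal sketch, assuming the message has the same wording as the one quoted above (the function name is illustrative):

```python
import re

# The message spans two log lines, so let "." match newlines as well.
MISMATCH = re.compile(
    r"client has the version (\d+(?:\.\d+)*)"
    r".*?kernel module has the version (\d+(?:\.\d+)*)",
    re.DOTALL,
)


def find_version_mismatch(dmesg_text):
    """Return (client_version, kernel_module_version), or None if no mismatch is logged."""
    match = MISMATCH.search(dmesg_text)
    return match.groups() if match else None
```

For the log excerpt above it returns the pair ("361.77", "304.131"), which points at the stale 304 driver.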

ERROR (theano.sandbox.gpuarray): pygpu was configured but could not be imported

Install libgpuarray and pygpu, as per this link: Theano: Libgpuarray Installation

ERROR: Installation failed: using unsupported compiler

Add --override to the command where you execute the downloaded .run file, e.g.:

$ sudo sh cuda_8.0.27_linux.run --override

ERROR: error: ‘memcpy’ was not declared in this scope

This may happen when you try to compile the examples in the toolkit (see chapter 6 of the Guide for the Toolkit).

Open the file NVIDIA_CUDA-8.0_Samples/6_Advanced/shfl_scan/Makefile and add the following lines at line 149:

... previous lines omitted

144 else ifeq ($(TARGET_OS),android)

145 LDFLAGS += -pie

146 CCFLAGS += -fpie -fpic -fexceptions

147 endif

148

149 # custom added line below

150 CCFLAGS += -D_FORCE_INLINES

151

152 ifneq ($(TARGET_ARCH),$(HOST_ARCH))

153 ifeq ($(TARGET_ARCH)-$(TARGET_OS),armv7l-linux)

154 ifneq ($(TARGET_FS),)

... next lines omitted

Other tips

Enabling GPU when running jupyter notebook

Simply prefix the jupyter notebook command with the flags, e.g.

$ THEANO_FLAGS=mode=FAST_RUN,device=gpu,floatX=float32 jupyter notebook
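
If you cannot control how the notebook server is started, an alternative is to set the variable from inside the notebook. Theano reads THEANO_FLAGS when it is first imported, so this has to happen before any import of theano or keras. The helper below is a hypothetical convenience for building the flag string; the flag values are the ones used in this post.

```python
import os


def theano_flags(settings):
    """Join (name, value) pairs into the comma-separated THEANO_FLAGS format."""
    return ",".join("%s=%s" % (name, value) for name, value in settings)


flags = theano_flags([("mode", "FAST_RUN"), ("device", "gpu"), ("floatX", "float32")])

# Must run before the first `import theano` in the notebook or script.
os.environ["THEANO_FLAGS"] = flags
```

Put this in the first cell of the notebook, then import theano or keras in a later cell.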

See also

Theano Docs - Easy installation of Optimized Theano on Ubuntu

SO: How can I force 16.04 to add a repository even if it isn't considered secure enough

SO: Graphics issues After installing Ubuntu 16.04 with NVIDIA Graphics

NVIDIA: Installation Guide for the CUDA Toolkit 8.0 (requires free registration)
