Spark SQL Case/When Examples

Last updated:

Table of Contents

Spark version 2.4.3 used

All code available on this jupyter notebook

Case when/otherwise

Template:

df.withColumn("new_column_name",

when(<column_condition>, <value_when_true>).otherwise(<value_when_false>))

Example:

import org.apache.spark.sql.functions.when

val df = Seq(

("notebook","2019-01-19"),

("notebook", "2019-01-10"),

("small_phone", "2019-01-15"),

("small_phone", "2019-01-30"),

("tv_set", "2019-01-22"),

("large_phone", "2019-01-30"),

("medium_phone", "2019-01-22")

).toDF("device", "purchase_date")

// derived column that marks whether the device

// ends in "phone"

df.withColumn("is_phone",when($"device".endsWith("phone"),true).otherwise(false))

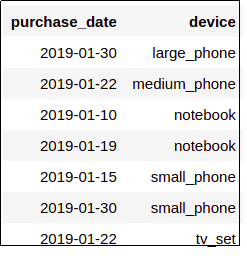

Source dataframe

Source dataframe

Colum

Colum is_phone was created based off the device column

Case when without otherwise

If you don't provide an otherwise value, it will be null:

Example: Generate new column to say what type of phone it is

import org.apache.spark.sql.functions.{element_at,split,when}

val df = Seq(

("notebook","2019-01-19"),

("notebook", "2019-01-10"),

("small_phone", "2019-01-15"),

("small_phone", "2019-01-30"),

("tv_set", "2019-01-22"),

("large_phone", "2019-01-30"),

("medium_phone", "2019-01-22")

).toDF("device", "purchase_date")

// this will be null in cases where the when clause doesn't match

df.withColumn("phone_size", when($"device".endsWith("phone"), element_at(split($"device","_"),1)))

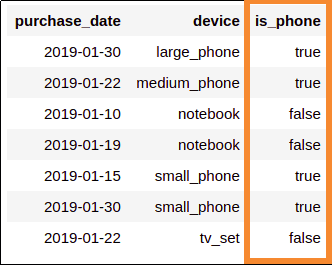

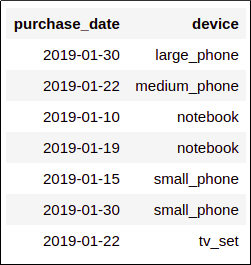

Original dataframe. Note that not

Original dataframe. Note that not all devices are phones

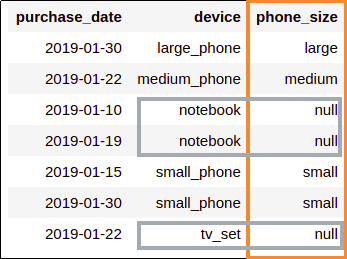

Since we didn't provide

Since we didn't provide an

otherwise clause, we get nulls where there was no match

Multiple when clauses

HEADS-UP: remember to use more restrictive conditions before less restrictive ones, like you would when using if/else if

You can use multiple when clauses, with or without an otherwise clause at the end:

Template:

df.withColumn("new_column_name",

when(<condition_1>, <value_for_condition_1>)

.when(<condition_2>, <value_for_condition_2>)

...

.otherwise(<value_when_no_conditions_matched>)) // optional otherwise at the end

Example: get shipping rates depending on the product

import org.apache.spark.sql.functions.when

val df = Seq(

("notebook","2019-01-19"),

("notebook", "2019-01-10"),

("small_phone", "2019-01-15"),

("small_phone", "2019-01-30"),

("tv_set", "2019-01-22"),

("large_phone", "2019-01-30"),

("medium_phone", "2019-01-22")

).toDF("device", "purchase_date")

// subsequent calls to when are called on the method itself

df.withColumn("shipping_rate",

when($"device"==="tv_set",30)

.when($"device".isin("large_phone","notebook"),20)

.when($"device".isin("small_phone","medium_phone"),10)

.otherwise(25)) // in case we sell other devices

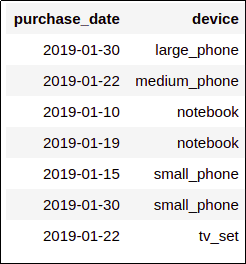

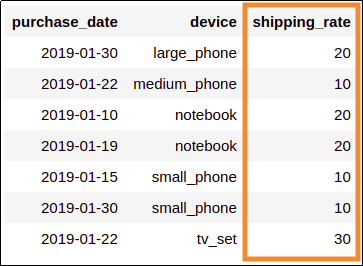

Source dataframe

Source dataframe

Added a new column, based on

Added a new column, based on what type of device if is